AI addiction: when an assistant becomes the master

Artificial intelligence is increasingly becoming a part of everyday life. But can a convenient assistant become a source of addiction? Let's examine the mechanisms and risks.

Artificial intelligence (AI) is steadily becoming a part of our daily lives. On the one hand, it helps with many kinds of intellectual work. On the other hand, its use can turn into what is increasingly called AI addiction, which is the topic of this publication.

Mechanisms for addiction formation

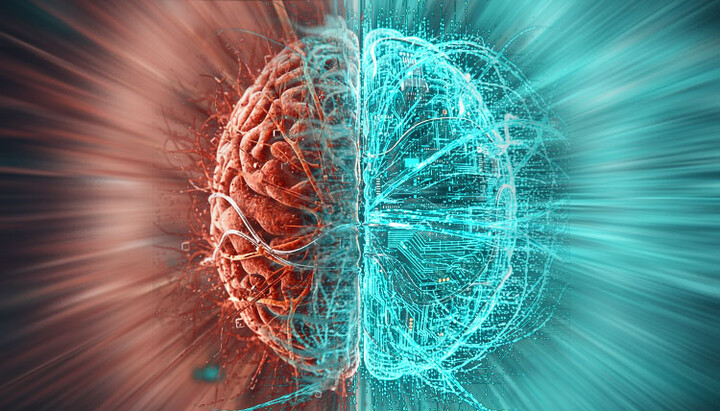

Neurodependence is a neurobiological phenomenon in which the brain adapts to repeated intense stimuli. This leads to compulsive seeking of those stimuli and loss of control over behavior. It is not just a "bad habit" but a complex and dangerous change in brain function.

The central role in addiction formation belongs to the brain's reward system, whose main neurotransmitter is dopamine.

Normally, dopamine is released in response to pleasurable and survival-beneficial actions, such as eating, physical activity, or social interaction. This release reinforces the behavior and makes it desirable. The objects of addiction cause a strong and rapid release of dopamine. This "explosion" significantly exceeds the level of dopamine obtained from natural stimuli. The brain "remembers" this powerful effect and begins to associate it with its source.

AI addiction is the same kind of neurobiological phenomenon. The mechanisms by which it arises are similar to those of other behavioral addictions, such as gambling or social media addiction. AI systems, especially chatbots, work on the principle of instant gratification: you ask a question or give a command, and you receive a response almost immediately. This quick and predictable response triggers a release of dopamine in the brain.

The brain quickly learns to associate interaction with AI with this pleasant feeling. Over time, the association is reinforced into a powerful neural pathway, and the brain seeks to repeat the behavior.

Moreover, AI does not criticize, does not judge, and is always ready to "communicate". For people who feel lonely or experience anxiety in face-to-face communication, this can become a substitute for human contact. The brain perceives such interaction as "safe" and comfortable, which further strengthens the dopamine cycle.

AI tools simplify complex tasks: writing text, analyzing data, searching for information. This relieves the brain of the need to exert effort, which in the short term feels like pleasant relief. In the long term, however, it can lead to the atrophy of one's own skills. The brain "forgets" how to solve problems independently because it increasingly delegates them to AI, and it comes to depend on AI as an external "crutch".

As AI use becomes compulsive, the brain adapts to frequent dopamine "surges".

Dopamine receptors become less sensitive, which leads to tolerance. To achieve the same level of "pleasure", a person has to spend more and more time with AI. Without it, they begin to experience anxiety, irritability, and discomfort: the first signs of dependency.

Ultimately, interaction with AI can trigger a cycle of habituation in which the brain demands ever more dopamine rewards while self-control weakens. This makes AI a powerful but potentially dangerous tool.

Patristic understanding of dependency: a parallel with the teaching on thoughts

The patristic tradition, in describing the stages through which thoughts act on a person, essentially describes the same mechanism of addiction formation that neuroscientists study today. This shows that the fundamental laws of the human psyche remain unchanged over the centuries; only the objects they are directed at change.

Let's draw this parallel step by step, using the classic patristic framework.

1. Suggestion

Essence: This is the initial, fleeting, and unconscious appearance of a thought, image, or desire. The thought is "attached" to the mind from the outside. At this stage, the person has not yet evaluated, accepted, or rejected it. There is no guilt here, only the fact of external influence.

Parallel with AI: This is the moment when you first encounter AI. You see an advertisement, hear about ChatGPT, or need to quickly find something. You decide to use it "just to try" or to solve one specific task. This is not yet dependency, but the first contact.

2. Conversation

Essence: The person begins to "converse" with the thought, that is, to enter into a dialogue with it. Rather than cutting it off immediately, they start to reflect on it, examine it, and perhaps even find some benefit or interest in it. At this stage, the mind is no longer passive; it is actively engaged. The person is no longer rejecting the thought, but giving it space.

Parallel with AI: You have started actively using AI. You no longer ask just one question but use it for various tasks: writing emails, creating content, generating ideas. You notice how conveniently, quickly, and easily it solves your problems. You begin to "converse" with it, convincing yourself that it is "just an efficient tool", and fail to notice how it starts to replace your own cognitive effort.

3. Consent

Essence: This is the moment when a person's will finally agrees with the thought. Doubts disappear, and the person decides to act in accordance with the thought. It is no longer just a thought but a conscious intention.

Parallel with AI: The person can no longer imagine their work, or even their daily life, without AI. They fully trust its answers, stop verifying information, and stop thinking critically. They decide to use AI not just as a tool but as the only way to solve problems. An emotional attachment appears: the person feels comfortable communicating with the bot and uncomfortable when it is unavailable. This is the stage of conscious acceptance of dependency.

4. Captivity

Essence: The thought has become so rooted in the mind that it has become obsessive. It "captures" the will, and the person cannot get rid of it, even if they realize its harm. The desire to perform the action becomes compulsive. The will is no longer free; it is subject to passion.

Parallel with AI: At this stage, the person realizes that they spend too much time and effort on AI. They may try to cut down but keep relapsing. They feel anxious and helpless when AI is unavailable. The compulsive behavior (turning to AI constantly, for any reason) no longer brings the same pleasure, but without it "withdrawal" sets in.

5. Passion

Essence: This is a stable, chronic state. The thought becomes part of the character and personality. It is no longer just a thought or desire but an ingrained habit of the soul. The person becomes a slave to their passion, and it is very difficult for them to get rid of it without radical internal changes and external help.

Parallel with AI: The person is completely dependent on AI. They lose the ability to think independently, their social connections with real people weaken, and they may neglect their responsibilities for the sake of interacting with AI. This is a fully formed addiction that requires serious work on oneself and often the help of a specialist.

The problem of AI addiction, like any other behavioral dependency, requires a comprehensive approach that addresses both external habits and internal psychological attitudes.

Therefore, it is important to monitor one's interest in AI carefully and, where possible, keep it from becoming excessive, since excess may have serious consequences in the future and harm not only our mental but also our spiritual health.